7 notable NDC London 2016 talks

This post is a review of some talks from NDC London 2016 that I found interesting.

I finally went through all the talks I bookmarked and thought it would be nice to give an overview of the most notable ones. The first version of this post was titled “Best NDC 2016 talks”, but that looked too clickbaity to me, so I changed it to a more modest one.

Here’s my disclaimer. While I went through a lot of talks (probably half of them), I could still have missed some gems whose titles didn’t seem appealing enough to me. Also, many speakers didn’t prepare anything new for this conference and brought an existing talk instead. I tended to rate such repeated talks lower. The talks are listed in no particular order.

Autonomy, Connection, and Excellence; The Building Blocks of a Knowledge Work Culture by Michael Norton.

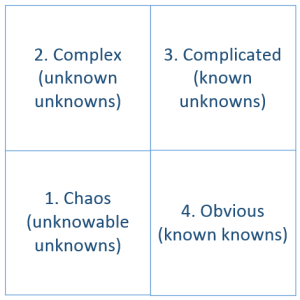

The speaker talks about a variation of the Cynefin Framework. According to this framework, every problem domain falls into one of several categories; the takeaways below refer to four of them: Obvious, Complicated, Complex, and Chaos.

Cynefin Framework

Takeaway #1. Chaos and Obvious domains are good for central management. In Complicated and Complex domains, management should be delegated to the people doing the work.

Takeaway #2. Most practices taught in MBA programs come from the Chaos and Obvious quadrants and aren’t applicable to the Complicated and Complex ones.

Takeaway #3. Extrinsic (external) motivators work well in Chaos and Obvious. Intrinsic (internal) motivators work in Complex and Complicated domains.

This point corresponds to many earlier studies which showed that traditional ways of motivating people don’t work in knowledge-work professions. Here’s a great book on that topic: Management 3.0: Leading Agile Developers, Developing Agile Leaders.

Takeaway #4. The more important a decision is, the closer it should reside to the actual implementers of this decision.

There’s a lot of evidence for this point in our profession, too. The closer you are to the task at hand, the more information you have about it. It also argues against having an architect who doesn’t code but has the right (and often uses it) to make any technical decision in the project. That’s not a healthy situation to be in.

Takeaway #5. Prefer diversity of thought over diversity of appearance.

This one speaks for itself: don’t conflate the two types of diversity.

Saying “Goodbye” to DNX and “Hello!” to the .NET Core CLI by Damian Edwards & David Fowler.

This talk finally helped me get my head around the new .NET Core stack and sort out its terminology.

Takeaway #1. .NET Core consists of the following:

- CoreFX: a new base class library (BCL);

- CoreCLR: a new, more lightweight, cross-platform CLR (with GC and other usual CLR features);

- CoreRT: a new native compiler that compiles .NET applications to native machine code. The resulting executable includes all the required CoreFX and runtime parts and doesn’t need anything else installed to run;

- .NET Core CLI: a command-line toolchain for building .NET Core applications. It provides a single model for building .NET applications on all platforms.

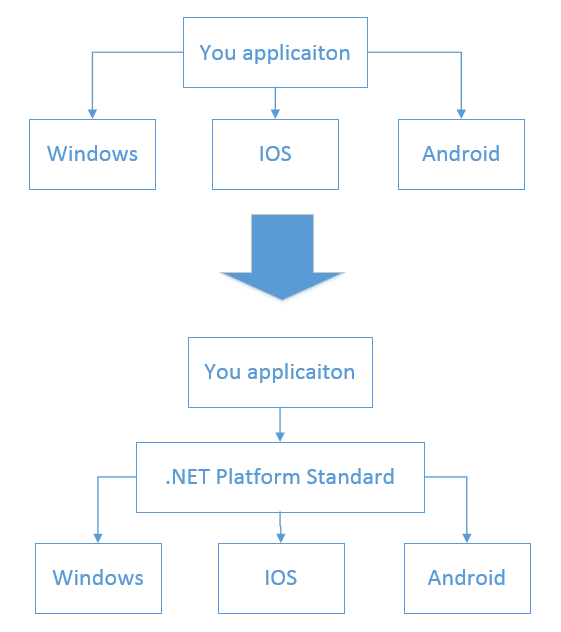

Takeaway #2. The .NET Platform Standard defines a set of contracts (APIs) that different platforms support. You can target a specific version of that standard, and your app will then automatically support all platforms that fulfill that contract. A higher version gives you more APIs but, at the same time, is supported by fewer platforms.

This is essentially an additional layer of abstraction between your application and the underlying platform. Now, instead of targeting concrete platforms, you can target a certain .NET Platform Standard:

.NET Platform Standard

The benefit here is that if some new platform or a new version of an existing platform comes along, you don’t have to recompile your application in order to target it. If that new platform implements the .NET Platform Standard you support, your software will run on this platform without your intervention.
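To make the “higher version, fewer platforms” trade-off concrete, here is a toy model in Python. The platform names and the standard versions they implement are hypothetical, not the real .NET Platform Standard mapping; the only rule modeled is that a platform can run your library if it implements the standard version you target or a higher one.

```python
from dataclasses import dataclass
from typing import List, Tuple

Version = Tuple[int, int]  # (major, minor), e.g. (1, 3)


@dataclass(frozen=True)
class Platform:
    name: str
    # Highest standard version this platform implements (hypothetical values).
    implements: Version


def platforms_for(target: Version, platforms: List[Platform]) -> List[str]:
    """Names of the platforms that can run a library targeting `target`.

    A platform implementing version X also implements every lower version,
    so it qualifies whenever X >= target (tuples compare element by element).
    """
    return [p.name for p in platforms if p.implements >= target]


platforms = [
    Platform("PlatformA", (1, 0)),
    Platform("PlatformB", (1, 3)),
    Platform("PlatformC", (1, 5)),
]

# A low target reaches every platform; a higher one unlocks more APIs,
# but fewer platforms can run it.
print(platforms_for((1, 0), platforms))  # ['PlatformA', 'PlatformB', 'PlatformC']
print(platforms_for((1, 4), platforms))  # ['PlatformC']

# A platform that appears later and implements (1, 3) can run the
# (1, 0)-targeted library without any recompilation on our side.
platforms.append(Platform("PlatformD", (1, 3)))
print(platforms_for((1, 0), platforms))  # ['PlatformA', 'PlatformB', 'PlatformC', 'PlatformD']
```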

If you want to dive deeper into this topic, check out this article.

Test automation without a headache: Five key patterns by Gojko Adzic.

The speaker goes through different kinds of tests and speaks about test automation in general.

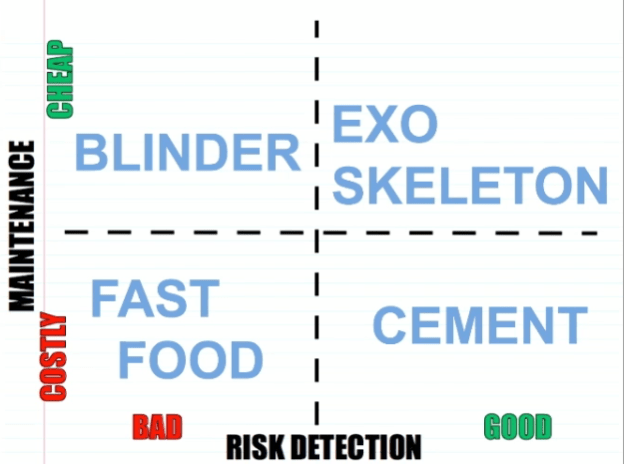

Types of tests

Takeaway #1. Types of tests:

- Fast food: auto-generated tests with costly maintenance and poor risk detection;

- Cement: large spreadsheets of raw data verifying that some calculation process returns the correct number. Hard to maintain but, unlike fast food tests, scary to delete;

- Blinder: tests with mocks which only verify that you mocked your system correctly;

- Exoskeleton: the type of tests you should aim to create.

Takeaway #2. The purpose of a good test suite is to enable fast changes.

I would personally add that a good test suite should also give you confidence that your changes don’t break the system.

Takeaway #3. The speaker corrects the “rule of mocks” given in the GOOS book. Instead of mocking (simulating) only the things you own, you should only mock things you completely understand.

The difference is subtle but important: you may understand a third-party library well enough to skip writing your own wrapper around it and mock the library directly. In fact, the authors of the GOOS book themselves break their rule in a couple of the book’s examples for exactly this reason. The speaker also adds that you can mock things you don’t completely understand if they are unlikely to change.
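As a rough illustration of mocking a well-understood dependency directly instead of wrapping it first, the sketch below patches Python’s standard `time.time` with `unittest.mock.patch`. The standard library module stands in for “a library you understand well”, and `is_expired` is a hypothetical function under test.

```python
import time
from unittest import mock


def is_expired(created_at: float, ttl_seconds: float) -> bool:
    """Hypothetical code under test: calls time.time() directly, with no wrapper."""
    return time.time() - created_at > ttl_seconds


def test_is_expired_after_ttl() -> None:
    # time.time() has simple, stable semantics, so mocking it directly is
    # reasonable; there's no need for our own clock wrapper just to test this.
    with mock.patch("time.time", return_value=1_000.0):
        assert is_expired(created_at=900.0, ttl_seconds=60)
        assert not is_expired(created_at=950.0, ttl_seconds=60)


test_is_expired_after_ttl()
```

If the dependency were something you only half understood, or one likely to change, writing a thin wrapper you own and mocking that would be the safer option.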

Takeaway #4. Make the connection from inputs to outputs obvious.

- If you use the Given/When/Then notation, don’t chain several When/Then pairs (When/Then/When/Then) in one test; that makes it a cement test. Keep each test to a single G/W/T section.

- To make preconditions and assertions clear in GWT, put givens in the past tense and thens in the future tense. That way, mixing up their order produces grammatically nonsensical sentences.
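Here is a minimal sketch of a test that follows both tips: a single Given/When/Then section, with the given phrased in the past tense and the then in the future tense. The `Account` class and its `withdraw` method are hypothetical, made up for this example.

```python
class Account:
    """Hypothetical domain class used only for this example."""

    def __init__(self, balance: int) -> None:
        self.balance = balance

    def withdraw(self, amount: int) -> None:
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount


def test_withdrawal_within_balance() -> None:
    # Given an account was opened with a balance of 100 (past tense)
    account = Account(balance=100)

    # When 30 is withdrawn
    account.withdraw(30)

    # Then the balance will be 70 (future tense)
    assert account.balance == 70
    # Another When/Then pair would go into a separate test, not here.


test_withdrawal_within_balance()
```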

Takeaway #5. With auto-generated tests, people try to optimize the process of writing tests, whereas tests should be optimized for troubleshooting, which takes place long after the tests are written.

Takeaway #6. Don’t organize tests around user stories. Organize them around business functionality instead. Over time, tests tied to work items become hard to find, so once a feature is completed, reorganize its tests for discoverability.

Takeaway #7. Developers should write automated tests themselves rather than hiring separate automation specialists. Such specialists can’t keep up with development, so the tests tend to become a mess.

Functional programming in Excel by Felienne Hermans.

Not much to take away from this talk, really, but I still wanted to include it here. It’s a fascinating and very entertaining presentation of how MS Excel can be used for functional programming. The way the speaker solved a dynamic programming problem by representing it visually in Excel is mind-blowing.

.NET Deployment Strategies: the Good, the Bad, and the Ugly by Damian Brady.

Takeaway #1. Adding more governance (more people who must sign off on a release) doesn’t help with deployments; it only adds an illusion of safety while slowing delivery down.

Takeaway #2. Deploy frequently -> Get confidence in the process -> Deploy even more frequently.

Takeaway #3. Instead of making backups, focus on being able to fix bugs quickly:

- Make reversible (transitional) changes in the database. For example, instead of dropping a column, rename it first and drop it only after everything has been tested;

- Employ feature toggles to test new functionality on a small group of users.
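One common way to try new functionality on a few users is a percentage rollout: hash each user into a bucket from 0 to 99 and enable the feature only for buckets below a threshold. The sketch below assumes that approach; `is_enabled` and its parameters are hypothetical names, not the API of any particular toggle library.

```python
import hashlib


def is_enabled(feature: str, user_id: str, rollout_percent: int) -> bool:
    """Hypothetical percentage-based feature toggle.

    Hashing feature + user ID gives each user a stable bucket in [0, 100),
    so the same user consistently sees (or doesn't see) the feature, and
    different features roll out to different subsets of users. SHA-256 is
    used instead of the built-in hash(), which is randomized per process
    for strings.
    """
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < rollout_percent


# Start with roughly 5% of users, then raise the percentage as confidence grows.
users = [f"user-{i}" for i in range(1000)]
enabled = sum(is_enabled("new-checkout", u, 5) for u in users)
print(f"{enabled} of {len(users)} users see the new checkout")
```

Because a user’s bucket never changes, raising the percentage only adds users; nobody who already had the feature loses it, and setting it back to 0 acts as a quick off switch.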

No Estimates: Let’s Explore the Possibilities by Woody Zuill.

The speaker is one of the originators (the originator?) of the NoEstimates movement. He has a nice presentation style, and the talk is interesting and entertaining to listen to.

Takeaway #1. Estimates are generally wrong, but if they turn out right, that’s even worse: it means you are either building a routine, trivial project or padding the estimates too much.

Takeaway #2. To start the NoEstimates practice, break the work down into understandable, cohesive parts and start executing on them without estimating.

I would personally add that management generally doesn’t care about estimates if you deliver new features quickly enough (within a couple of days). So, focus on widening the delivery funnel instead of measuring velocity and estimation accuracy.

Takeaway #3. You can find out what something will take only when you start doing it. Before that, you have too many unknown unknowns.

Takeaway #4. The phrase “we cannot improve something until we can measure it” is wrong. We can sense an improvement without measuring it.

Good point. Technical debt is one of the examples that illustrate it.

Takeaway #5. A complex system designed from scratch never works and cannot be patched up to make it work. All complex systems that work evolved from simple systems that work.

Couldn’t agree more. Over-engineering and upfront design rarely work.

Designing with capabilities for fun and profit by Scott Wlaschin.

The speaker explains how good design entails a good security model and vice versa. This talk reminded me of Joe Duffy’s article in which he describes how to eliminate ambient authority and access control checks and replace them with explicit capability objects.

Takeaway #1. Good security == good design. With good design, it’s easier to provide good security.

The two also share a similar perspective. Good design tells us to minimize our surface area in the code to reduce coupling and dependencies. Good security encourages us to minimize our surface area to reduce the chance of abuse.

Takeaway #2. Security that always says “no” to the developer is sub-optimal, as the developer will find a way around it. It is better to have a system that makes it easy to say “yes” while still keeping control over what can be done.

Takeaway #3. If you trust someone who has a capability, you automatically trust anyone whom that person trusts or whom that person can be tricked into trusting. Don’t prohibit what you can’t prevent.

Takeaway #4. Centralized authorization systems (based on user identity) are rigid and hard to manage. Decentralized systems (based on capabilities) are easier to manage, as they offer more granular control. Centralized systems often lead to credential sharing: a situation where a user gives their credentials to someone else.
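The capability idea can be sketched in a few lines of Python: instead of a service that checks “who is calling?” against a central permission table, the caller is handed a function that can do exactly one thing, and the grantor can revoke that function later. `DocumentStore`, `read_capability`, `make_revocable`, and `render_preview` are hypothetical names for this sketch, not code from the talk; wrapping a capability in a revocable proxy is a common pattern in capability-based designs.

```python
from typing import Callable, Dict, Tuple


class DocumentStore:
    def __init__(self) -> None:
        self._docs: Dict[str, str] = {}

    def save(self, doc_id: str, text: str) -> None:
        self._docs[doc_id] = text

    def read_capability(self, doc_id: str) -> Callable[[], str]:
        # The returned function can read this one document and nothing else:
        # no user identity, no access to other documents or to save().
        return lambda: self._docs[doc_id]


def make_revocable(
    capability: Callable[[], str],
) -> Tuple[Callable[[], str], Callable[[], None]]:
    """Wrap a capability so that whoever granted it can switch it off later."""
    revoked = False

    def proxy() -> str:
        if revoked:
            raise PermissionError("capability has been revoked")
        return capability()

    def revoke() -> None:
        nonlocal revoked
        revoked = True

    return proxy, revoke


def render_preview(read_doc: Callable[[], str]) -> str:
    # No authorization logic here: holding read_doc *is* the permission.
    return read_doc()[:20]


store = DocumentStore()
store.save("report", "Quarterly numbers are in. Revenue is up.")
read_report, revoke_report = make_revocable(store.read_capability("report"))
print(render_preview(read_report))  # Quarterly numbers ar
revoke_report()  # from now on, read_report() raises PermissionError
```

Note how `render_preview` gets exactly the access it needs and no more, which is the “minimize the surface area” point from Takeaway #1 applied to permissions.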

Afterthoughts

This kind of post format is new to me. I will probably keep doing it in the future, when new conferences come along or when I encounter enough unrelated but interesting talks which I think are worth sharing. Let me know if you find this format interesting and/or helpful.
