How I tried to get into game development and failed, Part 2

Part 2 of my story. You can read the first part here.

Working on the tank design

I wrapped up the previous post by saying that we decided to change the game idea from robots to tanks.

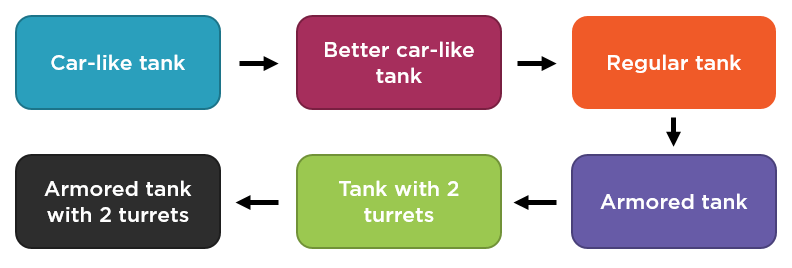

We refined our vision of the game. There were going to be multiple tank levels to introduce a sense of progression. When starting out, you’d have a tank with the least health (hit points, HP) and firepower, and you’d work your way up to a tank of the highest level. We decided to create a line of 6 different tanks.

One possible way to implement the progression was to award players experience points (XP) every time they killed someone, but we decided that there was a better way. Instead, we made destroyed tanks drop stars, which the player could collect to advance towards the next level. This decoupled upgrading the tank from killing someone and added more tactical variety. Now you could wait until one player shot another, then quickly sneak in, pick up the star, and finish off the weakened enemy. That actually happened a lot after we released the game.

We decided to make it more attractive to go after real players by creating two types of stars. A real player’s tank of level 3 or higher would drop a gold star, which would give you an instant +1 level. A bot or a lower-level player would drop a silver one. You’d need to collect several silver stars to get a new level. Both gold and silver stars fully healed your tank.
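The star rules above can be sketched in a few lines. This is only an illustration, not our actual code: the number of silver stars per level (`SILVER_STARS_PER_LEVEL`), the tank object shape, and the `maxHpForLevel` callback are all assumptions made for the example.

```javascript
const MAX_LEVEL = 6;              // we planned a line of 6 tanks
const SILVER_STARS_PER_LEVEL = 3; // assumption: the post only says "several"

// Which star a destroyed tank drops: gold only for real players of level 3+.
function starDroppedBy(victim) {
  return !victim.isBot && victim.level >= 3 ? "gold" : "silver";
}

// Applies a collected star to a tank and returns the updated tank.
// maxHpForLevel is a hypothetical callback that maps a level to its full HP.
function collectStar(tank, star, maxHpForLevel) {
  let { level, silverStars } = tank;
  if (star === "gold") {
    level = Math.min(level + 1, MAX_LEVEL); // instant +1 level
  } else {
    silverStars += 1;
    if (silverStars >= SILVER_STARS_PER_LEVEL && level < MAX_LEVEL) {
      level += 1;
      silverStars = 0;
    }
  }
  // Both kinds of star fully heal the tank.
  return { ...tank, level, silverStars, hp: maxHpForLevel(level) };
}
```

Whether a gold star should also reset the silver counter is a design choice the sketch leaves open; here the counter is simply kept.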

In terms of the progression itself, we came up with this plan:

Each level would give you a tank with more HP. Also, starting from the tank with two turrets, you’d have an additional shot in a row.

We thought about assigning different amounts of damage to tanks' bullets depending on the level but decided not to overcomplicate things. Besides, higher-level tanks were strong enough already, so there was no need to make them even stronger. And so each bullet dealt the same damage: 1 HP. The difference in armor between tanks of different levels was also only 1 HP, to avoid overpowering the better tanks.

In order to offset the advantage the higher-level tanks had over smaller tanks, we made them slower. Perks (which appear after you shoot a crate) made a lot of difference too. A smaller tank that picked up several perks had a good chance of standing up to a more powerful enemy.

Here’s the list of what we came up with:

- Silver star: advances you towards a higher level, restores HP.
- Shield: makes you invincible.
- Fire rate: adds +1 bullet to your salvo.
- Bullet speed: increases the speed of your bullets.
- Speed: increases your tank’s speed.

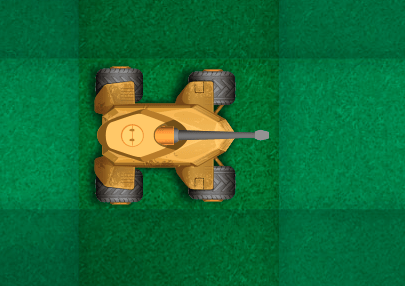

So this was our plan and we started implementing it in code. In parallel with that, the designer started working on the first tank design. And soon, she came up with version 1:

The designer took our suggestion to make a tank with wheels instead of tracks but the result turned out too heavy for the first level. We explained the whole progression concept to her and she soon introduced a new version:

This one was much better but still looked too big. We tried to find a balance in the size of the tanks. On one hand, you don’t want them to be too large as it would make the game feel less dynamic. Larger objects tend to move slower relative to their size and thus look more awkward. On the other hand, you don’t want the tanks to be too small either because that would mean lower resolution and fewer tank details you can show to the player.

After some experimentation, we made the tank a bit smaller and changed its turret. The existing one looked too overpowered for the lowest level. The designer added wheel animation and voilà, we had our first tank ready. She moved on to the second one.

We created a staging environment for the current version of the game so that the designer could see what her drawings looked like in the real game, and we updated it each time she released a new tank or changed an existing one.

The artwork went faster once the designer understood what we wanted from her. Soon we had the level 2 tank, wall, background, and perks ready:

We modified some things moving forward. For example, we changed the background to make it look less like a football field, altered the color of the walls, and increased them in size. But overall, the design was settled starting from that point.

Working on performance

One of the biggest tasks we had then was performance. We wanted to figure out 2 things:

- How many users our game could potentially handle, and
- What is the best way to scale the game out.

With the load similar to what slither.io experienced, there was no chance a single machine could handle everything. Having just a single machine also posed availability problems: one crash could bring the whole game offline. We wanted to know how many servers we would need. And for that, we had to know how many users a single server would be able to take.

So we began our performance tuning adventures.

Let me first describe the requirements we needed to meet with that tuning. Each game arena (also known as a game scene) had a cycle during which it handled user input, processed game objects (collision detection, kills, movement, generation of new objects, score assignment, etc.), and broadcast the game state back to users. This cycle is called a "tick" and it fired every 15 milliseconds. So the server was processing the game scene about 66 times a second.
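To make the tick budget concrete, here is a minimal sketch of such a loop with timing around it. The three scene methods (`handleInput`, `processObjects`, `broadcastState`) and the `onOverrun` callback are hypothetical names for this example, and `setInterval` is just one simple way to schedule ticks; Node does not guarantee it fires at exact 15 ms intervals.

```javascript
const TICK_MS = 15; // tick interval from the post

// Runs one tick of a scene and returns how long it took, in milliseconds.
// The three scene methods are hypothetical stand-ins for the real phases.
function timedTick(scene) {
  const start = performance.now();
  scene.handleInput();
  scene.processObjects();
  scene.broadcastState();
  return performance.now() - start;
}

// Starts the loop and returns a function that stops it.
// onOverrun is called whenever a tick exceeds the 15 ms budget.
function startLoop(scene, onOverrun) {
  const timer = setInterval(() => {
    const elapsed = timedTick(scene);
    if (elapsed > TICK_MS) onOverrun(elapsed);
  }, TICK_MS);
  return () => clearInterval(timer);
}
```

Logging the value returned by `timedTick` is roughly the kind of measurement the rest of this section relies on.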

Essentially, we had to make sure that the game arena was able to do all these tasks within the 15 ms time frame. Or, rather, we had to find out how many users it could handle without exceeding the 15 ms limit. Once we had this number, we would measure how much of the server’s CPU such an arena takes and figure out how many game arenas each server can support. That would give us a nice formula to calculate the number of servers needed to handle a given number of users.

What was interesting about this performance tuning is that we didn’t actually need anything other than CPU and a clean, fast network channel. Everything resided in memory, and we didn’t have any sort of database, so the requirements for the hard disk were minimal. We didn’t need much RAM either: the whole game scene required only about 100 KB of memory. That opened up some interesting hosting options, which I’ll write about later.

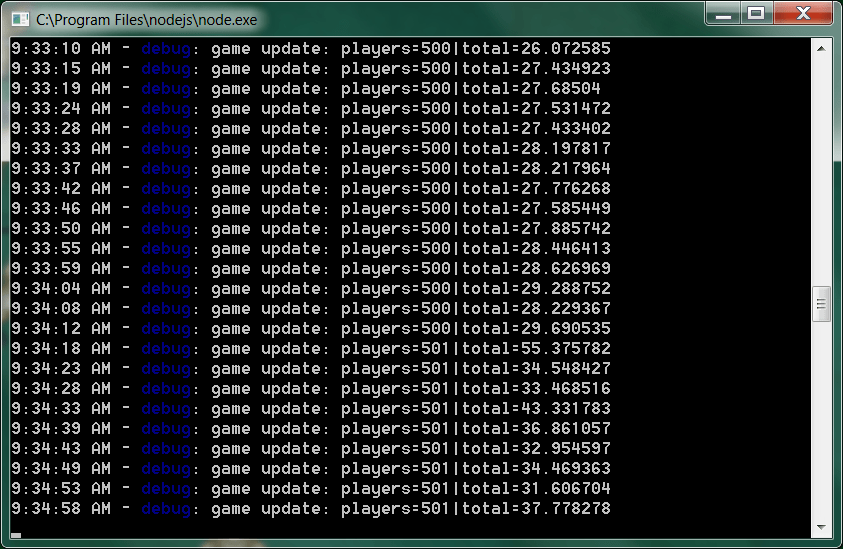

So we set up some logging and went ahead and added 500 bots to the game scene. Here’s what we got:

In this screenshot, you can see the total number of players (500 bots + 1 user) and the total time one tick took. The timing was way beyond the target 15 ms. But that wasn’t the only issue. You can see a clear trend in the screenshot: the timing goes up.

Our first thought was that there was some kind of leak going on. After some investigation, though, it turned out that this was not the case.

We had an issue with collision detection. The game checked collisions for each tank with all other tanks, even if they were on the opposite sides of the map. So the processing time was a quadratic function of the number of tanks in the game:

Tick_processing_time = num_of_tanks ^ 2

And it actually was even worse. The increase we first attributed to a leak of some sort was in fact due to a rising number of walls. When the game started, it didn’t add all walls at once but introduced them gradually, hence there were more objects to check collisions with over time. And as the number of walls was much greater than the number of tanks, their contribution to the overall processing time was also much bigger.

The dependency looked more like this:

Tick_processing_time = (num_of_tanks + num_of_walls) ^ 2

So even if we were to halve the number of tanks per game scene, it wouldn’t help much. To make the current logic work as is, we would have to both reduce the number of tanks per arena and introduce some cap on the number of walls, and that cap would be pretty small.
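The all-against-all check looks roughly like the sketch below. It is an illustration rather than our real code: objects are assumed to be squares with a center (`x`, `y`) and a side length (`size`), and the `checks` counter is there only to show the growth. Counting each unordered pair once gives n(n-1)/2 checks instead of n^2, but that is still quadratic.

```javascript
// Overlap test for two squares given by center (x, y) and side length size.
// This object shape is an assumption made for the example.
function overlaps(a, b) {
  const reach = (a.size + b.size) / 2;
  return Math.abs(a.x - b.x) < reach && Math.abs(a.y - b.y) < reach;
}

// Naive approach: every object is checked against every other object,
// no matter how far apart they are on the map.
function naiveCollisions(objects) {
  const hits = [];
  let checks = 0;
  for (let i = 0; i < objects.length; i++) {
    for (let j = i + 1; j < objects.length; j++) {
      checks++;
      if (overlaps(objects[i], objects[j])) hits.push([i, j]);
    }
  }
  return { hits, checks };
}
```

Doubling the number of objects roughly quadruples `checks`, which matches the upward trend we saw as walls kept being added.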

Clearly, there had to be a better solution to handle this situation. And there indeed was.

A common way to get rid of the quadratic dependency is to divide the game arena into sectors and check collisions only within each sector. This way, tanks or walls that are far away from each other wouldn’t be considered for the collision check. Which was exactly what we needed.

The function would look like this after the change:

Tick_processing_time = (num_of_tanks_in_sector + num_of_walls_in_sector) ^ 2 * num_of_sectors

Which is a much better proposition in terms of the amount of work the server has to do. Let’s say for example that the arena contains 50 tanks and 50 walls (the real ratio between them is not 1:1 of course). With the old approach, the server would need to perform 100^2 = 10,000 checks. But if you divide them into 10 sectors of 10 objects each, the number of checks drops to 10^2 * 10 = 1,000. A factor of 10 difference! And the gain would be even bigger with smaller sectors.

Of course, the downside of this approach is that you need to track the position of each game object and assign it to a new sector when it moves out of the old one. And that means you can’t make the sectors arbitrarily small as this would mean more work re-assigning tanks and bullets to new sectors. So there should be a balance. You need to pick a sector size which is neither too large nor too small.

Also, when checking for collisions, you need to take into account objects from adjacent sectors because two tanks could potentially be on the edges of sectors that are next to each other and thus still collide. That didn’t change the overall picture much, though.

So this is what we did. After some experimentation, we ended up with a sector size of 150 (for comparison, the 1st level tank size is about 60 and the arena size is 11000).
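Here is a minimal sketch of the sector idea, including the check against adjacent sectors. It is not our production code: the object shape (squares with center `x`, `y` and side `size`), the string sector keys, and rebuilding the grid from scratch on every call are simplifications made for the example. It also assumes no object is larger than one sector, otherwise looking at the 8 neighbors would not be enough.

```javascript
const SECTOR_SIZE = 150; // sector size from the post

// Overlap test for two squares given by center (x, y) and side length size.
function overlaps(a, b) {
  const reach = (a.size + b.size) / 2;
  return Math.abs(a.x - b.x) < reach && Math.abs(a.y - b.y) < reach;
}

// Buckets object indexes by the sector that contains the object's center.
function buildGrid(objects) {
  const grid = new Map();
  objects.forEach((o, i) => {
    const key = Math.floor(o.x / SECTOR_SIZE) + ":" + Math.floor(o.y / SECTOR_SIZE);
    if (!grid.has(key)) grid.set(key, []);
    grid.get(key).push(i);
  });
  return grid;
}

// Checks each object only against objects in its own and the 8 adjacent sectors.
function gridCollisions(objects) {
  const grid = buildGrid(objects);
  const hits = [];
  let checks = 0;
  objects.forEach((o, i) => {
    const sx = Math.floor(o.x / SECTOR_SIZE);
    const sy = Math.floor(o.y / SECTOR_SIZE);
    for (let dx = -1; dx <= 1; dx++) {
      for (let dy = -1; dy <= 1; dy++) {
        const bucket = grid.get((sx + dx) + ":" + (sy + dy)) || [];
        for (const j of bucket) {
          if (j <= i) continue; // visit each pair only once
          checks++;
          if (overlaps(o, objects[j])) hits.push([i, j]);
        }
      }
    }
  });
  return { hits, checks };
}
```

A real server would more likely keep the grid between ticks and move an object to a new bucket only when it crosses a sector border, which is the re-assignment cost mentioned above.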

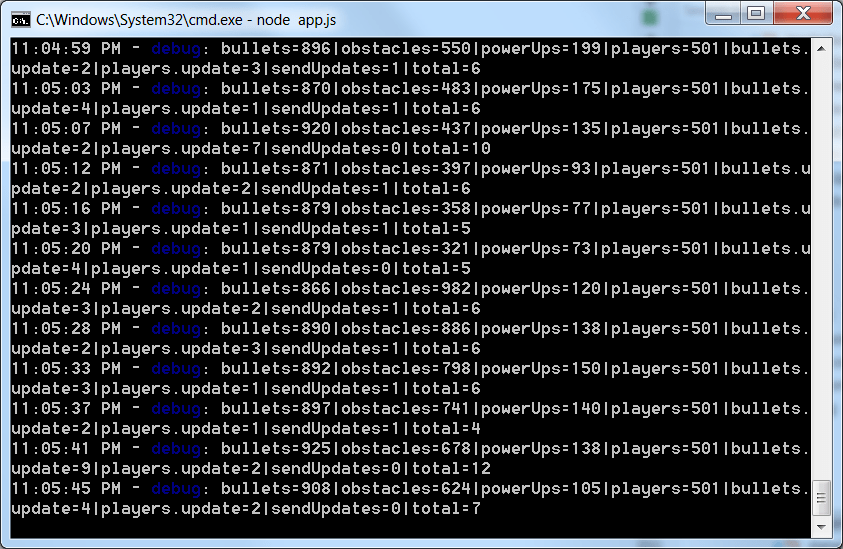

The performance gain was spectacular:

As you can see, with about 2,200 objects in the arena (bullets, obstacles which are either walls or crates, power-ups, and tanks themselves), the game took only about 10 ms to process a tick. Which is a couple of orders of magnitude less than before.

Alright, that was a nice performance gain but the environment in which we conducted the experiments was not quite close to what we would get in production. 500 of those players we populated the scene with were bots, not real users. And that meant the server didn’t send them any updates about the game state. There was only one client who received those updates.

So we performed another test. We deployed the latest version to staging and modified the client so that instead of one WebSocket connection, it created 200. We then both connected to the server to see how the server handles 400 connections. We did this test for both Linux and Windows machines.

The results were interesting.

First of all, it was a surprise for me personally that there was no difference in performance between Windows and Linux. For some reason, I thought that Linux (Ubuntu in our case) should have been more performant than Windows, just because it’s Linux. But, at least for our application, Node.js showed exactly the same numbers on both VMs.

Secondly, sending updates to real users took the server a lot of time. With about 400 connections, a tick took roughly 12 ms, and 10 of them were spent on this single task. We experimented with our code to see if the issue was on our side but it wasn’t. It looked like Node.js genuinely spent the majority of the time sending those messages.

That was also surprising because Node.js should do such things asynchronously. But it seemed that at least part of this work was done in the main thread, hence such numbers.

It wasn’t that big of a deal as it was still within our 15 ms limit. But we took some steps to improve the numbers anyway. We already had some optimizations in place related to sending updates. For example, the game didn’t send the state of all game objects to each player, only those that the player could see. It also didn’t send them on each tick; the game did that on every 5th tick. That’s because we had a great extrapolation algorithm on the client (which I wrote about in the last post). This algorithm could show smooth movement for game objects even with fewer data points, so there was no need to broadcast the game state that often. And so we saved server CPU cycles by sending that data less frequently.

The problem here was that all that work was performed in a single tick. So for the first 4 ticks, the server only checked the collisions, processed user input and moved the game objects, but didn’t send any updates. And on the 5th tick, it did all the work above plus it also sent the updates. The difference in processing time between the 5th and all other ticks was tremendous.

So what we did in addition to the existing optimizations was distribute the load across all 5 ticks. The server sent updates to the first fifth of the players on the first tick, the second fifth on the second, and so on. This allowed us to even out the processing times.
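The staggering can be sketched like this. Again, this is an illustration under assumptions: the player fields (`x`, `y`, `viewRadius`), the square visibility test, and the `send` callback are made up for the example, and players are split by index modulo 5 rather than into contiguous fifths, which gives the same even spread.

```javascript
const UPDATE_EVERY = 5; // each player still gets an update once per 5 ticks

// Returns the players due for an update on this tick: the player at index i
// belongs to group i % 5, and group g is served when tick % 5 === g.
function playersDueThisTick(players, tick) {
  return players.filter((_, index) => index % UPDATE_EVERY === tick % UPDATE_EVERY);
}

// Sends each due player only the objects inside their view.
// viewRadius and the send callback are hypothetical names for this sketch.
function broadcast(players, objects, tick, send) {
  for (const player of playersDueThisTick(players, tick)) {
    const visible = objects.filter(
      (o) =>
        Math.abs(o.x - player.x) <= player.viewRadius &&
        Math.abs(o.y - player.y) <= player.viewRadius
    );
    send(player, visible);
  }
}
```

Every player still receives one update per 5 ticks, but each tick now carries about a fifth of the sending work instead of one tick carrying all of it.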

Eventually, we figured out that each game scene could handle up to 200 players while still processing everything within the 15 ms time frame. Each such arena was hosted in a separate OS process and took about 30% of CPU time on a D1 Standard Azure VM. So we could run 3 game arenas on a single VM, and that VM could keep up with 600 players overall. Which was pretty good.
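With these numbers, the server-count formula is simple arithmetic. The sketch below uses the figures from this post; the function names are made up for the example, and it ignores any headroom you would want in practice.

```javascript
const PLAYERS_PER_ARENA = 200;   // from the post
const CPU_SHARE_PER_ARENA = 0.3; // about 30% of a D1 Standard Azure VM

// How many arenas fit on one VM given the CPU share each one takes.
function arenasPerVm(cpuShare = CPU_SHARE_PER_ARENA) {
  return Math.floor(1 / cpuShare);
}

// How many VMs are needed for a given number of concurrent players.
function vmsNeeded(totalPlayers) {
  const arenas = Math.ceil(totalPlayers / PLAYERS_PER_ARENA);
  return Math.ceil(arenas / arenasPerVm());
}
```

For example, 10,000 concurrent players would need 50 arenas, and at 3 arenas per VM that is 17 VMs.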

By the way, you may wonder which WebSocket library we used. It was ws. We considered using socket.io but decided not to do that. Socket.io has some nice features: it supports several transports in addition to WebSockets, so if the client’s browser doesn’t support WebSockets, the player can still connect to your game by falling back to something else, such as HTTP long polling. But we decided that we didn’t need that. WebSockets had already become a standard and the vast majority of browsers implemented them. Also, ws is closer to the bare metal and thus has less potential overhead in terms of both the learning curve and performance.

In the meantime, the designer was working on the game too:

Here you can see new art: a force shield, a bullet and a wooden box/crate (which you need to shoot in order to get perks).

Conclusion

Alright, that’s it for today. In the next episode, I’ll write about client side load balancing, new tanks, and, finally, game release.
