Short-term vs long-term perspective in software development

In this post, I’d like to talk about what I think is one of the most damaging attitudes a company or a person can have in the field of software development: a short-sighted perspective.

Short-term perspective in the real world

In an ideal world, we would all make choices that benefit us in the long haul. We would avoid eating late at night, exercise regularly, constantly improve our skills, etc. Unfortunately, we don’t always prioritize the long-term benefits these activities bring us. In fact, we often do the opposite.

This makes perfect sense when you look at it from the perspective of evolutionary biology. We humans evolved this way. When you sit in a cave and don’t know whether you are going to have anything to eat tomorrow, it’s a no-brainer to pig out at the first opportunity and do that as often as possible. Likewise, it makes sense not to waste your energy on thinking unless you have an emergency. It’s a matter of survival.

The problem, however, is that in today’s world, this behavior is not aligned with our long-term goals. Taking in an excessive amount of calories doesn’t equate to well-being anymore. The same is true for wasting time on brainless activities. We all still have this tendency, though, because we just haven’t had enough time (and probably never will) to evolve further and cope with the changed environment.

This, without exaggeration, is one of the biggest struggles of modern society. It’s no wonder that the ability to fight these ancient impulses and delay gratification is one of the best predictors of success in life.

Short-term thinking in software development

When it comes to software development, the situation is different but the dichotomy between short-term and long-term perspectives is there nonetheless.

One of the most infamous examples of it is Technical Debt - the metaphor coined by Ward Cunningham. It’s often easy to get the work done and make the software do what you want. It’s much harder to do the same in a way that doesn’t impede your ability to get the work done in the future. Crappy design decisions tend to pile up. And they decrease your programming capacity over time. When those decisions are deliberate (as in "We don’t have time for design"), they become a good example of prioritizing short-term goals (getting the current work done quickly) over long-term ones (getting the future work done quickly).

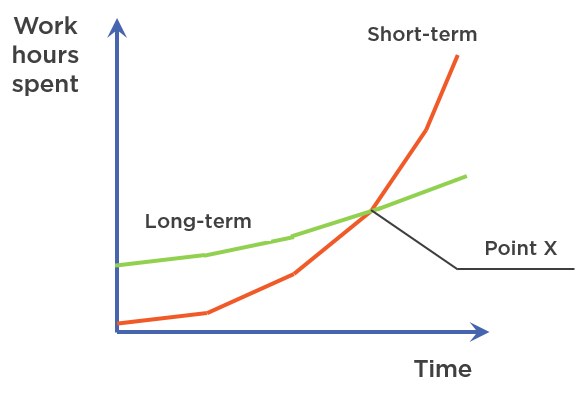

This whole tendency can be summarized with the following diagram:

If your planning horizon is short (for example, you know that the software under development will not be actively developed in the future), you are fine with the short-term perspective. In fact, you are better off this way because the total number of hours spent will be lower than it would be if you adhered to "proper" design practices. However, if your planning horizon is far beyond the so-called Point X, you are screwed.
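To make Point X a bit more tangible, here is a minimal sketch of the trade-off under some made-up assumptions. The numbers are purely illustrative and not taken from any real project: the quick-and-dirty approach starts at 10 hours per feature but each feature makes the next one 5% more expensive, while the carefully designed approach costs a flat 16 hours per feature. The function names `cumulative_hours` and `find_point_x` are hypothetical helpers invented for this example.

```python
def cumulative_hours(features, base_hours, debt_growth):
    """Total hours needed to ship `features` features.

    Feature number i (0-based) costs base_hours * (1 + debt_growth) ** i,
    so debt_growth = 0 models a design that does not degrade over time.
    """
    total = 0.0
    for i in range(features):
        total += base_hours * (1 + debt_growth) ** i
    return total


def find_point_x(max_features=200):
    """Return the first feature count at which the quick-and-dirty
    approach has cost more in total than the careful one, or None."""
    for n in range(1, max_features + 1):
        # Assumed, illustrative parameters (not measured data).
        quick = cumulative_hours(n, base_hours=10, debt_growth=0.05)
        careful = cumulative_hours(n, base_hours=16, debt_growth=0.0)
        if quick > careful:
            return n
    return None


if __name__ == "__main__":
    print("Point X is reached at feature number", find_point_x())
```

With these assumed parameters, the short-term approach stays cheaper for a while and then loses for good. Changing the growth rate or the upfront cost moves Point X, which is exactly why the length of your planning horizon matters.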

Note that the short-term perspective is not necessarily harmful per se. It only becomes harmful when it contradicts your long-term plans. When technical debt is a calculated, prudent choice, the short-term gains you get out of it align well with your long-term goals. There’s no use in well-designed software in the long run if failing to ship it early means bankruptcy for your company. No design, however good it is, will bring that company back to life.

That’s right, technical debt can be both beneficial and harmful to your long-term goals.

Short-term vs long-term focus on the learning curve

Technical debt is not the most interesting example of this dichotomy in software development, though. The most interesting one is where companies tend to put their focus in terms of the learning curve.

Let me explain what I mean by that.

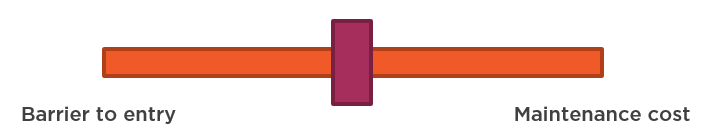

When it comes to using a piece of software, there’s a dichotomy between how easy it is to start using it (barrier to entry) and how easy it is to continue using it when you get familiar with the technology (maintenance cost).

It’s often the case that the lower the barrier to entry, the more effort the technology requires from you to keep using it in the future. It works like a slider:

The more emphasis you put on lowering maintenance costs, the harder the technology becomes for newcomers to learn. Likewise, reducing the barrier to entry means higher maintenance costs. Great software that helps you solve complex problems always requires you to put in some effort upfront to learn it before you become productive with it.

So what you can see is that vendors try to attract new clients by lowering the bar. They try to do all the heavy lifting themselves and hide the underlying complexity from the customer. It works great for a while, and you are usually able to start doing some useful stuff quickly. But after that initial period, when you try the technology in more complex scenarios, it starts to fall apart.

Microsoft is especially notorious for that. ASP.NET WebForms was an attempt to attract desktop developers to the Web by making web development seem very similar to desktop development. It completely ignored the difference between the stateless and stateful models. It worked great at the beginning, until you tried to do more sophisticated tasks with it. Want to refer to a control by its Id in JavaScript? Nope, can’t do that. You have to render this JavaScript on the server side because all client ids are also generated on the server. Want to put more than one form on a page? Nope, can’t do that either.

Entity Framework 1.0 was another example. It made object-relational mapping look easy by introducing drag-and-drop development and thus lowered the barrier to entry for newcomers, but the cost of maintaining the resulting beast was overwhelming for any project of more than modest complexity.

And the list goes on and on.

What’s the reason for this continuous failure to combine low maintenance costs with a low barrier to entry? It’s all because of the Law of Leaky Abstractions. You can’t hide a non-trivial system from the eyes of the users completely. They will have to deal with it sooner or later in one form or another. And it’s better to help them do that by educating them, and thereby raising the bar, than it is to pretend that the system doesn’t exist.

To be fair, Microsoft has recently started to depart from this attitude. Their focus on open source and the feedback the community provides go a long way.

So, what’s the takeaway here? Focus on the future maintenance cost first. Try to make it as small as possible. And if you are still able to keep the barrier to entry low, great. If not, don’t sacrifice the future needs for this brief learning period. It just isn’t worth it.

And if you are a programmer, don’t rely on the technology too much. Try to understand everything at least one level of abstraction below what you are doing currently. If you are a web developer, understand how HTTP, OAuth, etc. work; don’t simply count on your framework. Work with SignalR or Socket.IO? Take time to dig into the WebSocket protocol and HTTP long polling. Use an ORM? Learn the underlying database.

This understanding will help you tremendously when you try to troubleshoot an issue or choose between similar technologies.
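As one illustration of what looking a level below an ORM can reveal, here is a minimal sketch built on Python’s standard `sqlite3` module. Everything in it is made up for the example: the `Author` class is a hypothetical stand-in for an ORM entity with a lazily loaded collection (it is not the API of any real ORM), and `CountingConnection` is a small helper that counts the statements sent to the database. The point is only that two pieces of code that look equally simple at the object level can behave very differently at the SQL level.

```python
import sqlite3


class CountingConnection:
    """Wraps an in-memory SQLite connection and counts executed queries."""

    def __init__(self):
        self.conn = sqlite3.connect(":memory:")
        self.queries = 0

    def execute(self, sql, params=()):
        self.queries += 1
        return self.conn.execute(sql, params)


def seed(db, author_count=5):
    """Create two tables and one post per author (not counted as queries)."""
    db.conn.execute("CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT)")
    db.conn.execute(
        "CREATE TABLE post (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT)"
    )
    for author_id in range(1, author_count + 1):
        db.conn.execute(
            "INSERT INTO author VALUES (?, ?)", (author_id, f"author{author_id}")
        )
        db.conn.execute(
            "INSERT INTO post (author_id, title) VALUES (?, ?)",
            (author_id, f"post by {author_id}"),
        )


class Author:
    """Hypothetical ORM-style entity; `posts` hits the database on access."""

    def __init__(self, db, author_id, name):
        self._db, self.id, self.name = db, author_id, name

    @property
    def posts(self):
        rows = self._db.execute(
            "SELECT title FROM post WHERE author_id = ?", (self.id,)
        )
        return [title for (title,) in rows]


def titles_via_lazy_loading(db):
    """Looks innocent, but issues one query per author plus one for the list."""
    rows = db.execute("SELECT id, name FROM author ORDER BY id")
    authors = [Author(db, author_id, name) for author_id, name in rows]
    return {author.name: author.posts for author in authors}


def titles_via_join(db):
    """Fetches the same data with a single JOIN."""
    rows = db.execute(
        "SELECT a.name, p.title FROM author a "
        "JOIN post p ON p.author_id = a.id ORDER BY a.id"
    )
    result = {}
    for name, title in rows:
        result.setdefault(name, []).append(title)
    return result
```

Both functions return the same dictionary, but the lazy version sends one query per author in addition to the initial one, while the JOIN version sends a single query. You only notice the difference if you know what the abstraction does underneath.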

Summary

- It’s often impossible to lower both the barrier to entry for a technology and the future maintenance costs associated with using it.

- Prioritize maintenance costs over the barrier to entry.

- Understand everything at least one level of abstraction below what you are doing currently.
