How we think

Have you ever thought about how we think? How do we come up with a solution, and how do we decide whether it’s good or bad? It’s a fascinating topic, so let’s dive in!

How we think: a common belief

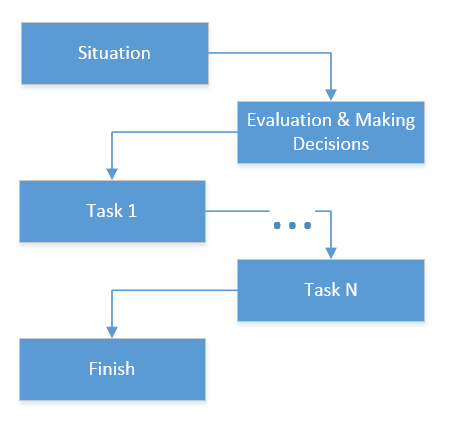

One popular belief is that our thought process works much like a computer program: when we decide to create something - a piece of software, an article, etc. - we just split the total amount of work into pieces and implement them step by step. In this model, all the thinking takes place at the very beginning, i.e. before we start carrying out the tasks.

While that might be the case for simple tasks where we know ahead of time what to do, in most cases the creation process is completely different: we try to do something with a plan in mind, encounter unexpected difficulties, and start over with a new plan.

That is why agile techniques shine: they make changing plans through constant evaluation and new decisions a first-class citizen in our day-to-day work. Instead of pretending that we can predict every change that can happen to a software project, we now assume we know very little about what it should look like and thus include regular learning and re-evaluation in our development process.

I’d like to focus on the "Evaluation & Making a Decision" step of the diagram as it is where our process of thinking takes place.

So, how do we think?

Many years ago, when I was at school, I noticed in one of my painting classes that while I could memorize a picture and later recognize it among others, I couldn’t just sit down and paint it right away. Recognition always went smoothly, but recreating a picture from memory took much more time and effort.

It seemed that these two distinct actions - memorization and recreation - are not symmetric. And, as I learned afterward, they really aren’t.

It turned out that when we memorize something, be it a picture, a chess board layout, or a software architecture, we don’t save it in our memory as is. What we save is a hash of it. That is, if there were some method that allowed us to read information straight out of our brain, all it would give us is a set of data that looks nothing like the memorized object.
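The asymmetry between recognizing and recreating can be sketched with an ordinary one-way hash. This is only an analogy, not a model of real memory: the function names `memorize` and `recognize` are hypothetical, and SHA-256 is swapped in here simply because it is a familiar hash that is easy to compute and impractical to reverse.

```python
import hashlib


def memorize(picture: str) -> str:
    """Keep only a fixed-size digest of the picture, not the picture itself."""
    return hashlib.sha256(picture.encode("utf-8")).hexdigest()


def recognize(picture: str, memory: set[str]) -> bool:
    """Recognition is cheap: hash what we see and check for a stored digest."""
    return memorize(picture) in memory


# What ends up "in our head" is a set of digests, nothing more.
memory = {memorize("tiger in tall grass"), memorize("sunset over a lake")}

print(recognize("tiger in tall grass", memory))  # True
print(recognize("zebra in tall grass", memory))  # False
```

Nothing in `memory` lets us paint the tiger back: the digests bear no visible resemblance to the original descriptions. Unlike this sketch, which needs an exact match, recognition in people clearly tolerates variation.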

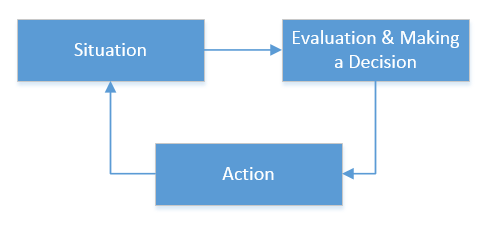

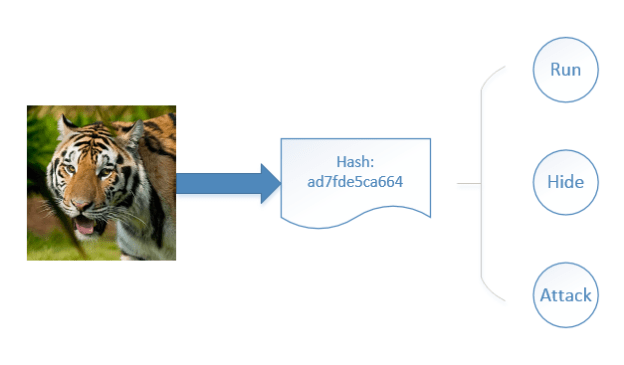

When we see an object or encounter a situation, our brain first converts it to a hash and then uses pattern matching to identify it. Each of the hashes saved in our memory has some action items attached to it: the experience gained during our life. We use those items to decide how to react to a particular stimulus.

Over time, evolution optimized our minds, making it extremely easy to memorize and then recognize a visual image. The reverse operation, on the other hand, was hardly used at all. Quickly recognizing a tiger was a matter of life and death; painting a tiger on a rock certainly wasn’t.
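As a rough sketch of that lookup, assume each memory is a small set of traits with action items attached, and that "pattern matching" means picking the stored memory most similar to what we see. The `Memory` class, the `react` function, the trait names, and the Jaccard similarity with a 0.5 threshold are all illustrative assumptions, not something the argument depends on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    features: frozenset[str]        # the stored "hash": a compact set of traits
    action_items: tuple[str, ...]   # what experience says to do about it


def similarity(a: frozenset[str], b: set[str]) -> float:
    """Jaccard similarity: shared traits divided by all traits seen."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def react(stimulus: set[str], knowledge_base: list[Memory],
          threshold: float = 0.5) -> tuple[str, ...]:
    """Find the closest stored memory and return its action items, if any."""
    best = max(knowledge_base,
               key=lambda m: similarity(m.features, stimulus),
               default=None)
    if best is None or similarity(best.features, stimulus) < threshold:
        return ()  # nothing familiar enough: no experience to draw on
    return best.action_items


knowledge_base = [
    Memory(frozenset({"stripes", "orange", "large", "teeth"}), ("run",)),
    Memory(frozenset({"round", "red", "small", "sweet smell"}), ("eat",)),
]

# A partial glimpse is enough: 3 of 4 traits match the first memory.
print(react({"stripes", "orange", "large"}, knowledge_base))  # ('run',)
print(react({"grey", "flat"}, knowledge_base))                # ()
```

The match does not have to be exact, which is the part a plain hash lookup cannot capture.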

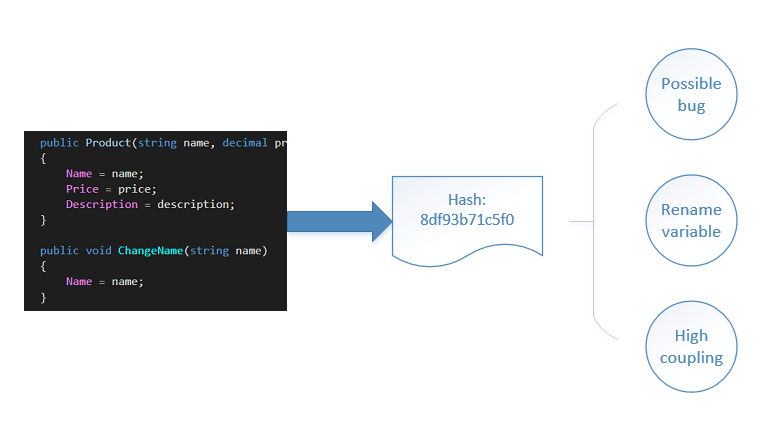

That said, our thinking is inherently nonlinear. Whether we code or draw a picture, the process remains very similar. First, we look at the picture or the code written so far. Then we search for similar samples in our knowledge base. After that, we look up the action items attached to them and make a change according to one of them. Then the process repeats.

You might have noticed that when you write code, you can’t foresee exactly what your code will look like. That is true even for simple tasks, because that is how our brains work: you constantly re-evaluate the situation, and if there are any action items attached to it in your knowledge base, you implement them.
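The loop described above (look, match, act, repeat) might be sketched as follows. The rules here are toy assumptions chosen for illustration: each pairs a hypothetical "pattern" check with an "action item" that rewrites a line of code.

```python
from typing import Callable

# A rule pairs a pattern ("do I recognize this?") with an action item.
Rule = tuple[Callable[[str], bool], Callable[[str], str]]

RULES: list[Rule] = [
    (lambda code: "  " in code, lambda code: code.replace("  ", " ")),
    (lambda code: code.endswith(" "), lambda code: code.rstrip()),
    (lambda code: "== None" in code,
     lambda code: code.replace("== None", "is None")),
]


def think(code: str, rules: list[Rule], max_steps: int = 20) -> tuple[str, int]:
    """Re-evaluate the code after every change until nothing is recognized."""
    steps = 0
    while steps < max_steps:
        for matches, act in rules:
            if matches(code):
                code = act(code)   # apply one action item...
                steps += 1
                break              # ...then look at the result afresh
        else:
            break                  # no pattern matched: we are done
    return code, steps


result, steps = think("if x  == None: ", RULES)
print(result, steps)  # if x is None: 3
```

Note that the final text is never planned up front: it emerges from three separate look-and-fix rounds.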

Learning by example

Everything said above has an important corollary: "outside in" learning doesn’t work. You can’t learn OOP principles without writing a program using classes, and you can’t learn functional programming principles without writing a program in a functional language.

I remember how I studied math at university. A professor would give us a lecture describing the details of, say, combinatorial analysis, and of course we didn’t understand a word. After that, we had a workshop in which the professor explained how to solve particular problems by applying the theory he had taught us in the lecture, and all of a sudden the theory started making sense.

Only after we were given several specific problems and their solutions did we start to understand the theory behind those solutions. Now it seems clear that it would have been much more productive if we had started with a minimum amount of theory, switched to practice, and only after that proceeded to more in-depth lectures.

We learn by example. Examples form our knowledge base: the set of hashes in our memory. Experience and practice help us attach action items to those hashes: what steps we should take to solve a concrete problem or improve a concrete solution. We use those hashes as building blocks to make generalizations. We derive general rules by grouping separate facts by their similarity or by the action items attached to them.

Have you ever thought about how mathematicians prove theorems? In most cases, they are already sure (or have a reasonable suspicion) that the theorem is correct. They infer that suspicion from a bunch of facts they know. The difficulty, then, is to come up with a formal proof, not with the idea of the theorem itself.

The power of our intellect can be described as the speed and accuracy with which we can search our knowledge base. Experience, on the other hand, is the knowledge base itself: it represents how many hashes we store in memory and how many action items are connected to them.

Human versus computer

It is interesting to compare human and computer intelligence. Let’s take chess as an example of where these two completely different types of thinking clash.

The basic algorithm behind a chess program is to look several (10 or even 15) moves ahead and crunch through all the possible positions, trying to find the best move. Of course, we can’t do such a thing. Moreover, if you try to mimic a chess program and calculate all the moves in advance, you will inevitably fail.

We are not computers; we think in a completely different way. Do you know how Kasparov managed to beat Deep Blue (then the best chess program) in their first match in 1996? He didn’t try to think ten moves ahead; that is simply impossible. What he did was recognize concrete patterns on the board and act according to the action items from his huge knowledge base.
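To make "crunch all possible positions" concrete, here is a minimal sketch of exhaustive game-tree search on a much smaller game than chess: a pile of stones from which players alternately take one to three, where whoever takes the last stone wins. The game and the function name `best_move` are assumptions made for illustration; real chess programs add depth limits, position evaluation, and pruning on top of this basic idea.

```python
def best_move(stones: int, max_take: int = 3) -> tuple[int, bool]:
    """Return (stones_to_take, can_force_win) by trying every continuation."""
    for take in range(1, min(max_take, stones) + 1):
        if stones - take == 0:
            return take, True  # taking the last stone wins outright
        # Otherwise, search everything the opponent could do in reply.
        _, opponent_wins = best_move(stones - take, max_take)
        if not opponent_wins:
            return take, True  # this move leaves the opponent lost
    return 1, False  # every move loses against perfect play


print(best_move(5))  # (1, True)
print(best_move(7))  # (3, True)
print(best_move(4))  # (1, False)
```

The search knows nothing about the game beyond its rules. A human player quickly spots the pattern instead (leave the opponent a multiple of four) and stops calculating.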

I see only one move ahead, but it is always the correct one. – Jose R. Capablanca.

Another telling example is the game of Go. At the time of writing, it is one of the few board games in which top computer programs can’t get even close to top human players. The point is that the tree of possible moves in this game is much wider than in chess. That means the classic exhaustive search algorithms used in chess programs lose out to the human method of memorization and pattern matching.

One possible way to turn the situation around would probably be to mimic the human process of learning: build a neural network and train it with as large a knowledge base as possible, but I’m not familiar with such initiatives.
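A quick back-of-the-envelope calculation shows why a wider tree hurts exhaustive search so much. The branching factors below (about 35 legal moves per position in chess and about 250 in Go) are commonly quoted rough averages, used here as assumptions rather than exact values.

```python
def positions_to_search(branching_factor: int, depth: int) -> int:
    """Number of move sequences in a full search tree of the given depth."""
    return branching_factor ** depth


CHESS_BRANCHING = 35   # assumed rough average of legal moves per position
GO_BRANCHING = 250     # assumed rough average of legal moves per position

for depth in (2, 4, 6):
    chess = positions_to_search(CHESS_BRANCHING, depth)
    go = positions_to_search(GO_BRANCHING, depth)
    print(f"depth {depth}: chess ~{chess:.1e}, Go ~{go:.1e}, "
          f"ratio ~{go / chess:.0f}x")
```

The gap itself grows exponentially with depth, so looking even a few moves further ahead in Go costs far more than it does in chess.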

Conclusions

What can we conclude from this? The key to becoming a master of something is to absorb as many separate facts (hashes) and implications of those facts (action items) as possible. There’s no universal algorithm for that, only the hard work of learning by example.

Great chess masters become great not because they think more moves ahead than others, but because they store a huge knowledge base in their memory. Similarly, great software developers become great when they get to know almost all of the problems they might encounter while developing software. Software design principles help generalize that knowledge base but can never substitute for it.
